TL;DR: Never use field injection in Spring, scope APIs well, and do not mock value objects.

As this story starts, I was the person responsible for an old application that was being replaced. The replacement was built by an external team (largely outsourced and supposedly senior), but once in production, the new system would become the responsibility of the maintenance team I was on.

Beginning this transition, I started looking at the new system. My initial impression was good. The database schema was cleaned up compared to the old system. The project seemed more organized. It had a DB migrations system set up. A big improvement compared to what it was replacing.

To get more accustomed to the new application, I started looking at simple bugs. My first bug was a simple mapping error in the data sent to an external system. I quickly found the offending code and changed the implementation. My change should have broken some tests, but instead of assertion failures I kept getting weird NPEs from data that could not possibly be null.

Testing the tests

Weirded out, I started looking into the test suite. The tests all looked something like this:

    @RunWith(MockitoJUnitRunner.class)
    public class MapperTest {

        @InjectMocks
        Mapper mapper;

        @Mock
        SubMapper mockedSubMapper;

        @Mock
        SubMapper2 mockedSubMapper2;

        @Mock
        NuValue next;

        @Mock
        Value value;

        @Test
        public void testMock() {
            // Stub the getters of a mocked value object
            when(value.getFoo()).thenReturn("Foo");
            when(value.getBar()).thenReturn("Bar");
            // Stub every collaborator to return yet another mock
            when(mockedSubMapper.map(any())).thenReturn(next);
            when(mockedSubMapper2.map(any())).thenReturn(next);

            NuValue nu = mapper.mapMaiStuff(value);

            // The only assertion: the result is not null
            assertNotNull(nu);
        }
    }

What does this do?

- When the Mockito runner processes the test class, it instantiates the class annotated with @InjectMocks, grabs the relevant @Mock fields, and injects them into that instance.

- All the when().thenReturn() calls then create the mock behavior and build the return values, essentially reimplementing or ignoring most of the behavior that should be under test.
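As a rough illustration of what injecting a dependency into a private field amounts to, here is a sketch using plain reflection. It is not Mockito's actual implementation (Mockito has its own injection strategies); `FieldInjector`, `Greeter`, and `NameSource` are hypothetical names made up for this example.

```java
import java.lang.reflect.Field;

// Hypothetical dependency and class under test, invented for this sketch.
interface NameSource {
    String name();
}

class Greeter {
    // No constructor or setter exposes this dependency.
    private NameSource nameSource;

    String greet() {
        return "Hello, " + nameSource.name();
    }
}

// Illustration only: assigns the dependency to the first field of a compatible type.
final class FieldInjector {
    private FieldInjector() {}

    static void inject(Object target, Object dependency) {
        for (Field field : target.getClass().getDeclaredFields()) {
            if (field.getType().isInstance(dependency)) {
                try {
                    field.setAccessible(true); // bypasses the private modifier
                    field.set(target, dependency);
                    return;
                } catch (IllegalAccessException e) {
                    throw new IllegalStateException(e);
                }
            }
        }
        throw new IllegalArgumentException("No field accepts " + dependency.getClass());
    }
}

class FieldInjectionDemo {
    public static void main(String[] args) {
        Greeter greeter = new Greeter(); // constructed, but not usable yet
        FieldInjector.inject(greeter, (NameSource) () -> "world");
        System.out.println(greeter.greet()); // prints "Hello, world"
    }
}
```

Note that `new Greeter().greet()` without the injection step fails with a NullPointerException, which is the kind of failure a test sees when the wiring silently does not happen.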

Even this simplified example is hard to read, but the tests I was dealing with had when() statements that went on and on, between 2 and 10 mocked dependencies, and several levels of mocked value objects.

What does this have to do with dependency injection and Spring? Is it not excessive mocking that is the problem?

The responsibility for this mess lies with the developers, but I think it is interesting to try to understand how they ended up in this dark alley. I think mocking is a symptom and not a cause here.

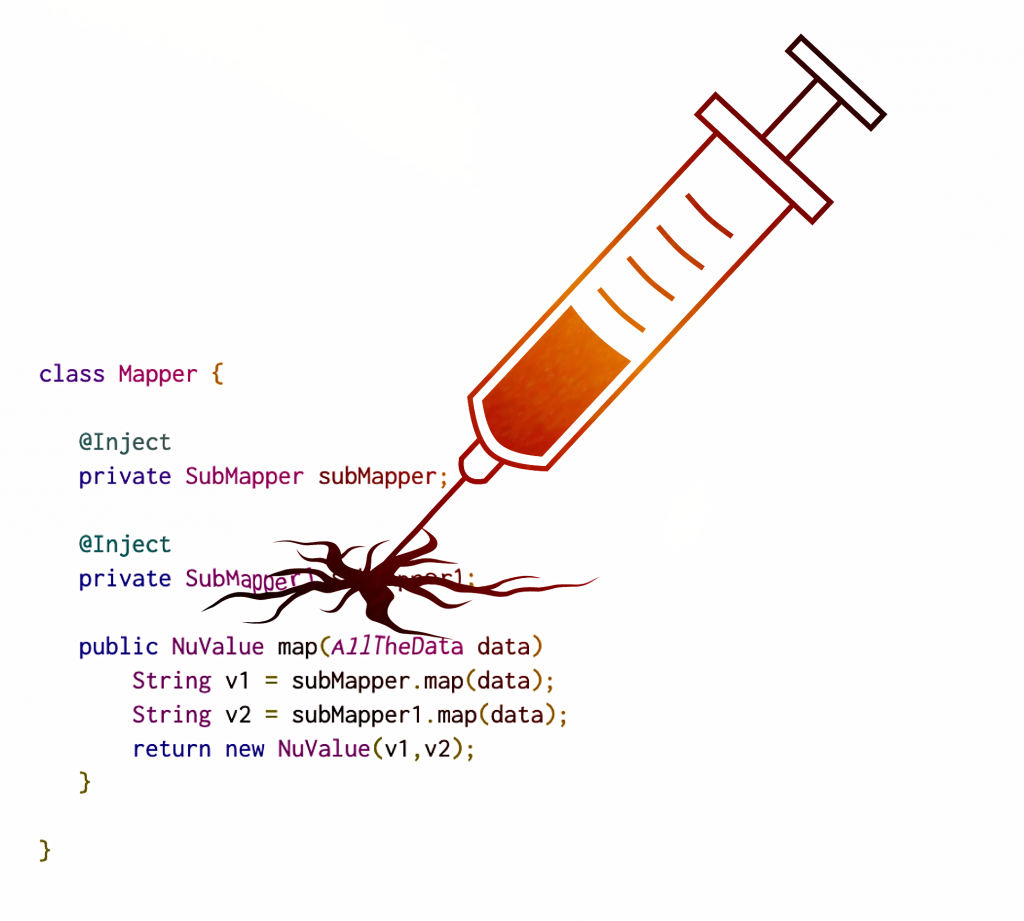

Much of the cause in my opinion lies with Spring. Spring dependency injection can be done by constructor, setter, or field injection. The classes I had trouble with all used field injection. They looked a lot like this.

    @Component
    class Mapper {

        // Field injection: the container sets these private fields reflectively
        @Inject
        private SubMapper subMapper;

        @Inject
        private SubMapper1 subMapper1;

        public NuValue map(AllTheData data) {
            String v1 = subMapper.map(data);
            String v2 = subMapper1.map(data);
            return new NuValue(v1, v2);
        }
    }

The mocking was done because the class itself cannot be properly constructed without some magic. The only ways to test it are to fire up Spring (slow) or to use something that reaches in and sets the dependencies. One such tool is Mockito's @InjectMocks.
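For contrast, here is a minimal sketch of the same shape of class using constructor injection. The type names mirror the simplified example, but their bodies are assumptions made up for illustration, and the Spring annotations are left out so the snippet runs on its own. Records assume Java 16 or later.

```java
import java.util.Objects;

// Hypothetical stand-ins for the types in the simplified example.
record AllTheData(String foo, String bar) {}
record NuValue(String v1, String v2) {}

interface SubMapper  { String map(AllTheData data); }
interface SubMapper1 { String map(AllTheData data); }

class Mapper {
    private final SubMapper subMapper;
    private final SubMapper1 subMapper1;

    // Dependencies are required up front, so a Mapper can never be half-built.
    Mapper(SubMapper subMapper, SubMapper1 subMapper1) {
        this.subMapper = Objects.requireNonNull(subMapper);
        this.subMapper1 = Objects.requireNonNull(subMapper1);
    }

    NuValue map(AllTheData data) {
        return new NuValue(subMapper.map(data), subMapper1.map(data));
    }
}

class ConstructorInjectionDemo {
    public static void main(String[] args) {
        // Plain `new` with lambda collaborators; no container and no mocking library.
        Mapper mapper = new Mapper(AllTheData::foo, data -> data.bar() + "!");
        System.out.println(mapper.map(new AllTheData("Foo", "Bar")));
        // prints "NuValue[v1=Foo, v2=Bar!]"
    }
}
```

Because the constructor is the only way in, a test that forgets a dependency fails to compile instead of failing later with an NPE.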

While this partly explains what happened, it does not explain why the value objects were mocked.

I think the value objects were mocked because some of these methods take very large value objects as input, while the code under test reads only a small part of them. A test then has to create the whole huge value object, which is tedious. So someone came up with mocking the value objects and rewiring them using Mockito. (This essentially means the value object has been reimplemented in the test.)
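One way to remove that temptation is to scope the method to the data it actually reads, so a test can build a small real value instead of stubbing a large one. A minimal sketch, in which `HugeOrder`, `Address`, and `LabelMapper` are hypothetical names invented for this illustration (records assume Java 16 or later):

```java
// Hypothetical value objects; imagine HugeOrder having dozens more components.
record Address(String street, String city) {}
record HugeOrder(String id, String customerName, Address shipping, String notes) {}

final class LabelMapper {
    private LabelMapper() {}

    // Narrow entry point: asks only for what it reads.
    static String shippingLabel(String customerName, Address shipping) {
        return customerName + ", " + shipping.street() + ", " + shipping.city();
    }

    // Thin adapter for callers that hold the whole object.
    static String shippingLabel(HugeOrder order) {
        return shippingLabel(order.customerName(), order.shipping());
    }
}

class NarrowApiDemo {
    public static void main(String[] args) {
        // The test input is two small real values; nothing is mocked.
        String label = LabelMapper.shippingLabel("Ada", new Address("1 Main St", "Oslo"));
        System.out.println(label); // prints "Ada, 1 Main St, Oslo"
    }
}
```

The logic worth testing lives behind the narrow signature; the adapter is trivial enough that one test with a real `HugeOrder` covers it.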

Both these practices seemed to have been adopted as convention. The result was:

- Tests that were clinging to the existing class structure, but that did not test anything useful. Mostly they tested themselves and Mockito. They tried to test some useful cases though, so they could not just be deleted.

- Extremely low development velocity. Every change to one of these classes caused a chain reaction of tests that had to be either hacked so that they compiled, or fully rewritten. Fully rewriting a test often caused other chain reactions of changes that were really hard to manage.

- It took close to half a year to get most of this code into a halfway acceptable shape with decent test coverage.

Most of these classes were refactored to pure functions. The classes were mostly transforming data from one representation to another. There was no need to involve Spring at all.
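As a sketch of what such a refactoring can look like (not the actual project code), a mapper that only transforms data can be a set of static methods with no injected state. `AllTheData`, `NuValue`, and their fields are assumptions carried over from the simplified examples; records assume Java 16 or later.

```java
// Hypothetical value types, carried over from the simplified examples.
record AllTheData(String foo, String bar) {}
record NuValue(String v1, String v2) {}

final class Mappers {
    private Mappers() {}

    // Former "sub mappers" become small pure functions.
    static String mapFoo(AllTheData data) {
        return data.foo().trim();
    }

    static String mapBar(AllTheData data) {
        return "bar:" + data.bar();
    }

    // Same input always yields the same output; no container is involved.
    static NuValue map(AllTheData data) {
        return new NuValue(mapFoo(data), mapBar(data));
    }
}

class PureFunctionDemo {
    public static void main(String[] args) {
        NuValue actual = Mappers.map(new AllTheData(" Foo ", "Bar"));
        // Records compare by value, so this checks the real output.
        if (!actual.equals(new NuValue("Foo", "bar:Bar"))) {
            throw new AssertionError("unexpected mapping: " + actual);
        }
        System.out.println("ok");
    }
}
```

A test for code like this needs only an input value and an expected output, which is why a change to the mapping shows up as an assertion failure rather than a wiring error.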

For some reason, whenever I see Spring projects, they manage way too much using Spring. My hypothesis is that once an annotation-based DI framework is in use, using it becomes an alluring path of least resistance. It is so easy to copy an existing class with @Component and hack from there, instead of thinking about the design.

Closing thoughts

This and other experiences have fueled in me a strong skepticism of annotation-based dependency injection frameworks. I have found that they are not needed most of the time. They can be used responsibly, but are they worth it? In my experience they create just as many problems as they solve.

To be fair, Spring no longer seems to use field injection in its documentation. That said, Spring does define what can be done. Field injection at best saves 3-5 lines of code per dependency, and it is never the right thing to do. Spring should just crash at startup if it detects field injection; that would be sane.

As far as Mockito goes, I think it was mostly innocent in this story, though I do think @InjectMocks should log warnings when it has to break the class contract to add mocks. During all of this, I also had the displeasure of learning what Mockito.RETURNS_DEEP_STUBS does. The documentation has this gem, though (a redeeming factor in my eyes):

WARNING: This feature should rarely be required for regular clean code! Leave it for legacy code. Mocking a mock to return a mock, to return a mock, (…), to return something meaningful hints at violation of Law of Demeter or mocking a value object (a well known anti-pattern).

Good quote I’ve seen one day on the web: every time a mock returns a mock a fairy dies.